Hello, I'm Sebastian Raschka, PhD

I'm an LLM Research Engineer with over a decade of experience in artificial intelligence. My work bridges academia and industry, and I have held roles as senior staff at an AI company and as a statistics professor.

My expertise lies in LLM research and the development of high-performance AI systems, with a deep focus on practical, code-driven implementations. (For my most up-to-date CV details, please visit my LinkedIn profile.)

Recent Notes and Blog Entries

The State of Reinforcement Learning for LLM Reasoning

Apr 19, 2025

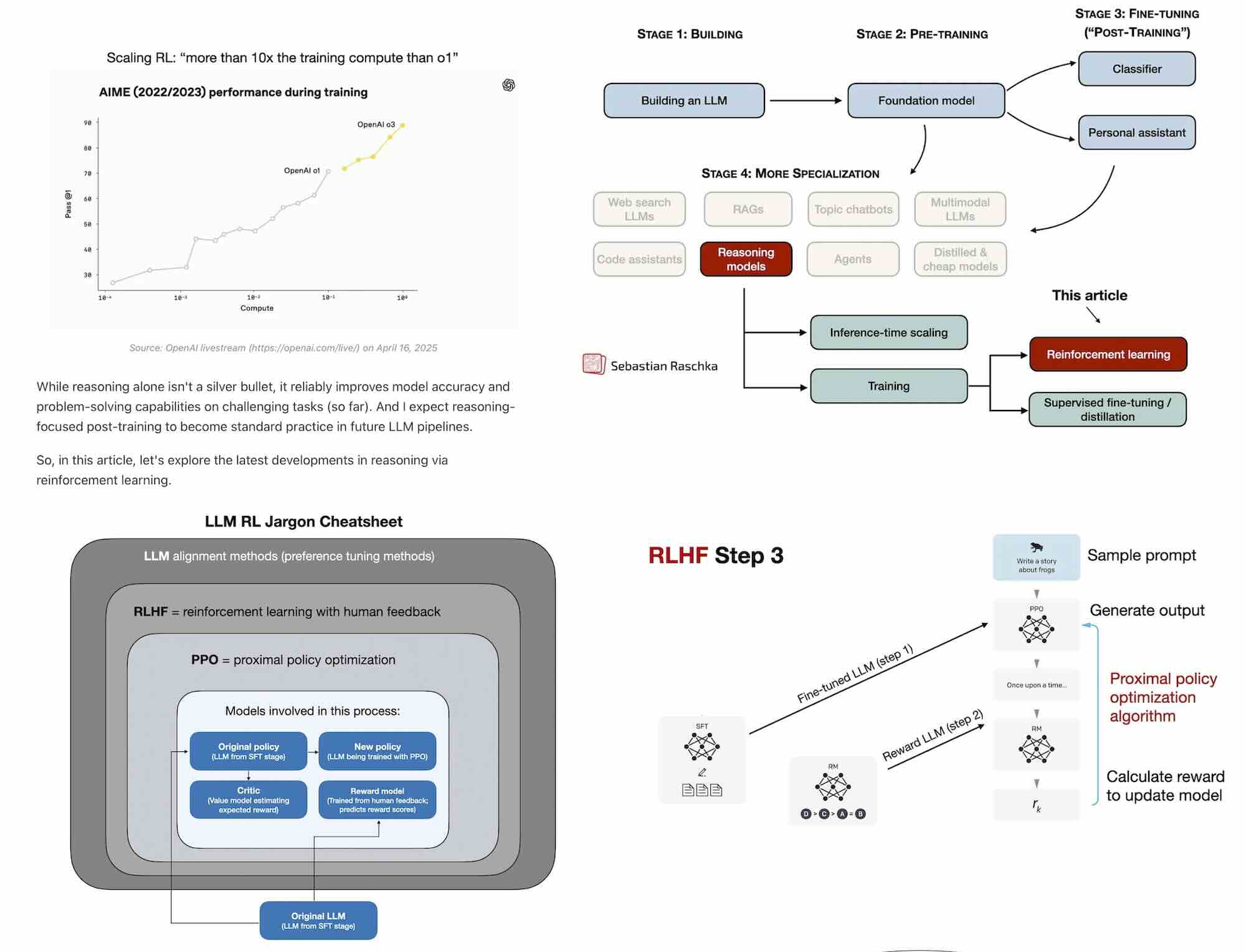

A lot has happened this month, especially with the releases of new flagship models like GPT-4.5 and Llama 4. But you might have noticed that reactions to these releases were relatively muted. Why? One reason could be that GPT-4.5 and Llama 4 remain conventional models, which means they were trained without explicit reinforcement learning for reasoning. However, OpenAI's recent release of the o3 reasoning model demonstrates there is still considerable room for improvement when investing compute strategically, specifically via reinforcement learning methods tailored for reasoning tasks. While reasoning alone isn't a silver bullet, it has so far reliably improved model accuracy and problem-solving capabilities on challenging tasks. I expect reasoning-focused post-training to become standard practice in future LLM pipelines. So, in this article, let's explore the latest developments in reasoning via reinforcement learning.

First Look at Reasoning From Scratch: Chapter 1

Mar 29, 2025

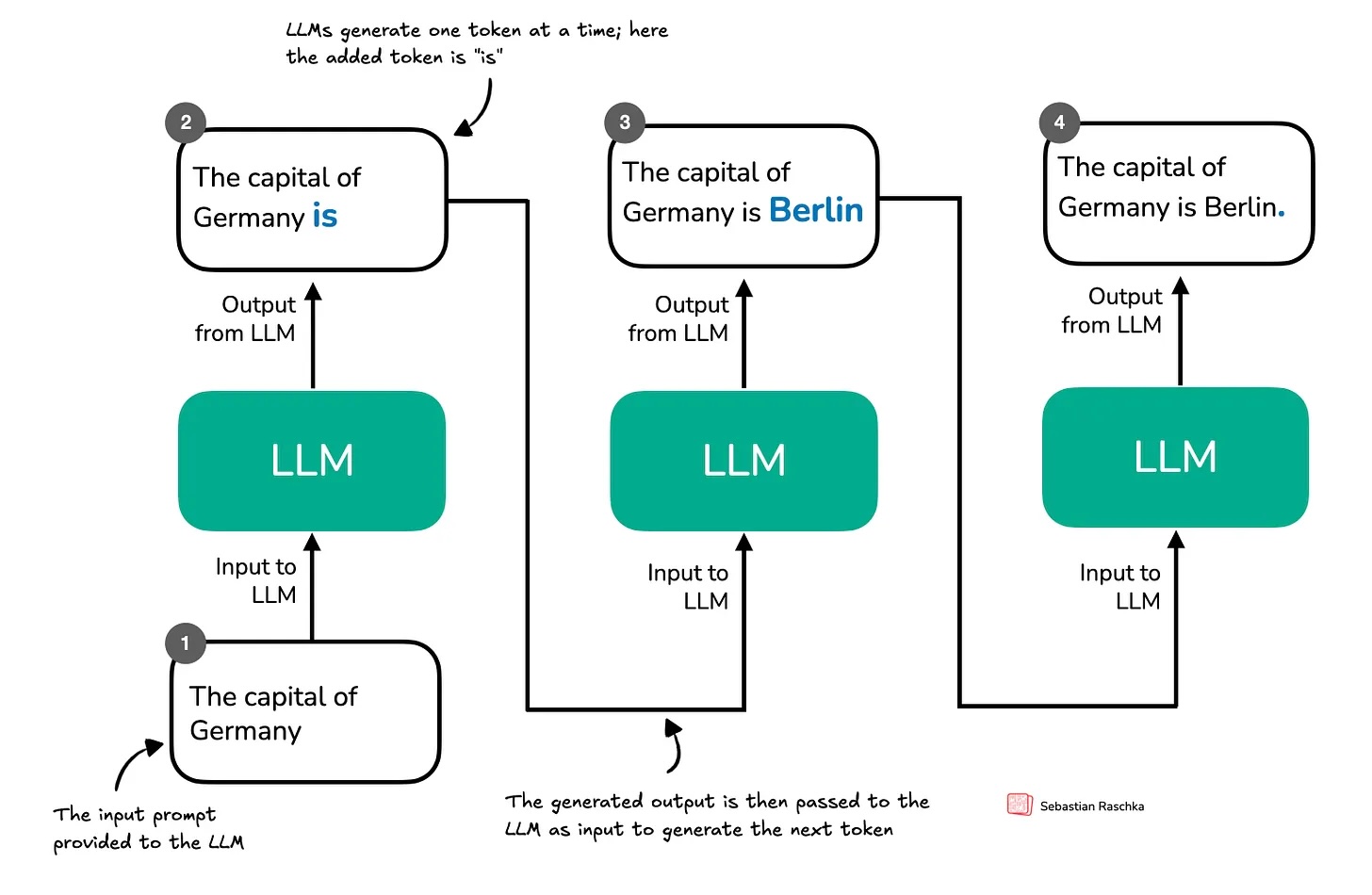

As you know, I've been writing a lot lately about the latest research on reasoning in LLMs. Before my next research-focused blog post, I wanted to offer something special to my paid subscribers as a thank-you for your ongoing support. So, I've started writing a new book on how reasoning works in LLMs, and here I'm sharing Chapter 1 with you. This ~15-page chapter is an introduction to reasoning in the context of LLMs and provides an overview of methods like inference-time scaling and reinforcement learning. Thanks for your support! I hope you enjoy the chapter, and stay tuned for my next blog post on reasoning research!

Inference-Time Compute Scaling Methods to Improve Reasoning Models

Mar 8, 2025

This article explores recent research advancements in reasoning-optimized LLMs, with a particular focus on the inference-time compute scaling methods that have emerged since the release of DeepSeek R1.

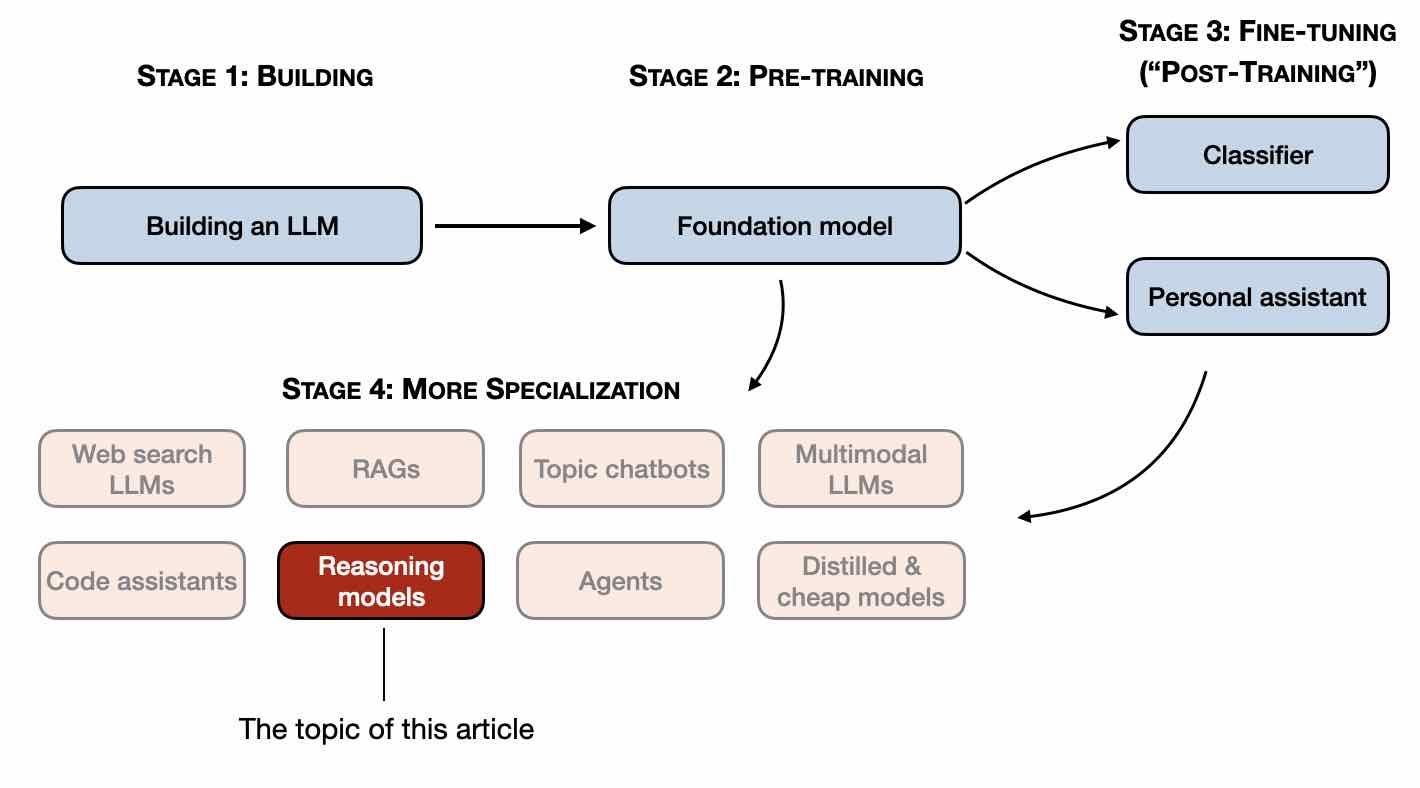

Understanding Reasoning LLMs

Feb 5, 2025

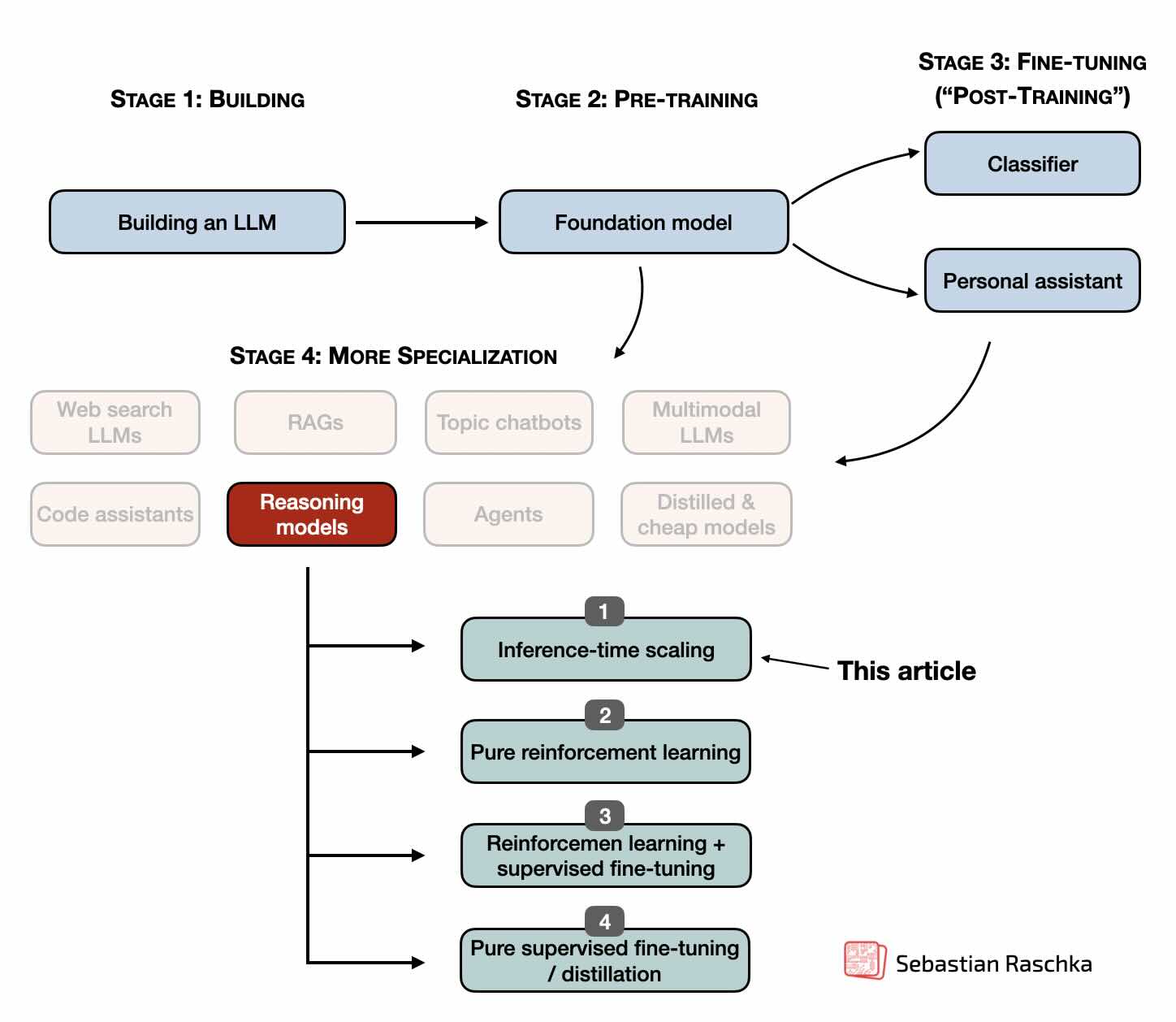

In this article, I will describe the four main approaches to building reasoning models, or how we can enhance LLMs with reasoning capabilities. I hope this provides valuable insights and helps you navigate the rapidly evolving literature and hype surrounding this topic.

Noteworthy LLM Research Papers of 2024

Jan 23, 2025

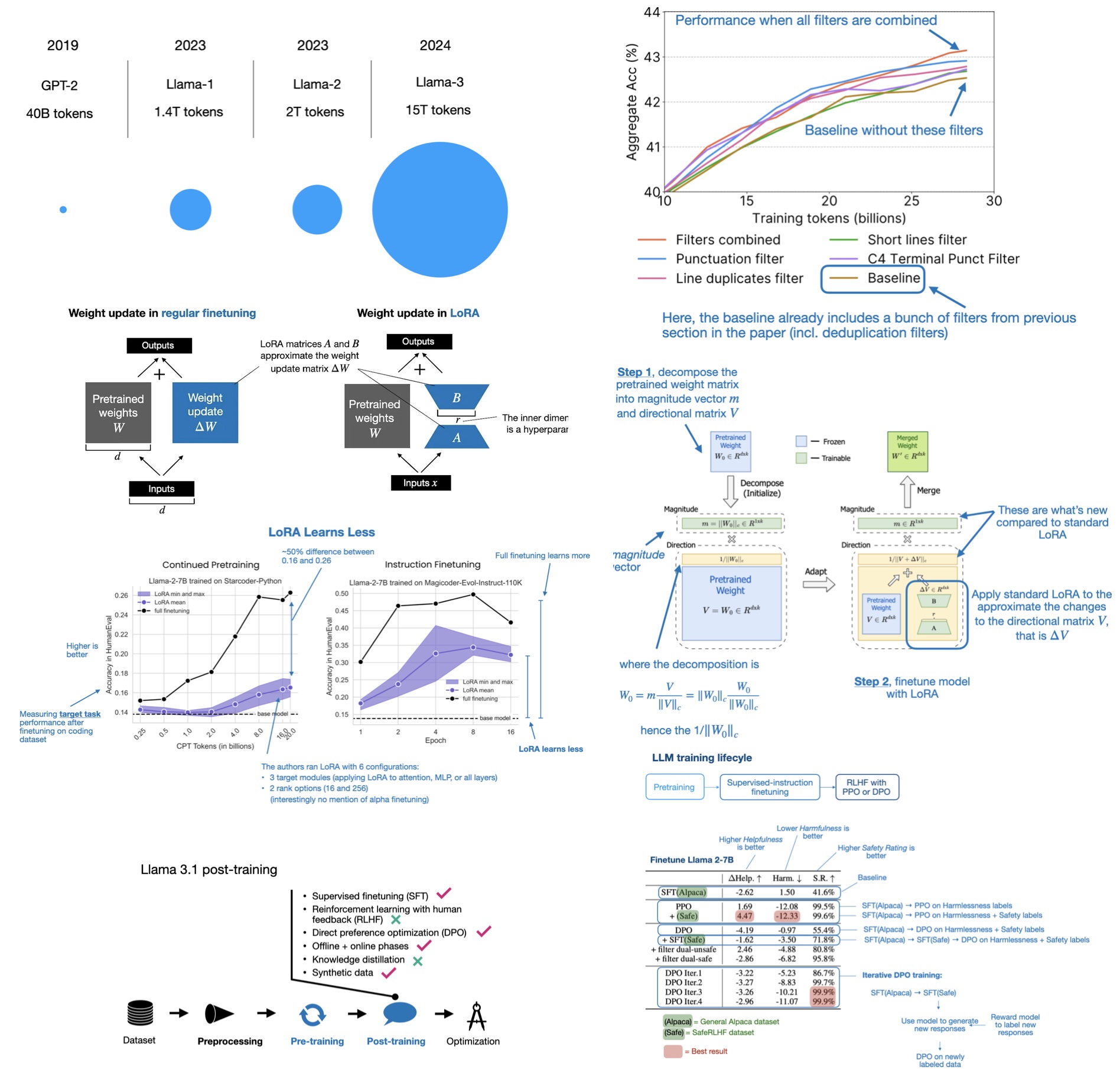

This article covers 12 influential AI research papers of 2024, ranging from mixture-of-experts models to new LLM scaling laws for precision.